How MCP is changing the AI agents ecosystem

TL;DR: Model Context Protocol (MCP) decouples an agent’s intelligence (chat/reasoning loop) from its tools (connectors that read data or take actions). With a common, open interface, any capable AI can discover and call any MCP-compatible tool. This unlocks consumer client consolidation, composability for enterprise AI deployments, and a new distribution channel for developers.

We've been building AI agents and have seen firsthand how much Model Context Protocol (MCP) has changed the ecosystem. Here is what we think its implications are.

Agents were closed systems, until now

An AI agent has two key components: (a) intelligence, meaning an interface and an agentic chat loop, and (b) integrations with tools. For example, Google Gemini has a chat interface and tool integrations with the Google Workspace suite.

The adoption of MCP is now making these two pieces composable. Previously, each agent was a closed system: there was no common way to extend the tools available to AI platforms (ChatGPT, Claude, Gemini, and others). OpenAI's GPTs supported custom actions, but they were hard for regular users to set up and relied on their own specification.

MCP has created a space where builders can agree on a common interface for how AI and tools interoperate. This decouples the stack: with MCP's rapid adoption, you can start to mix and match your favorite AI with the apps and tools you use. This gives users, builders, and enterprises new options.
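To make the "common interface" concrete, here is a minimal sketch of the discover-then-call pattern. MCP messages are JSON-RPC 2.0, with `tools/list` for discovery and `tools/call` for invocation. Everything else below is an assumption for illustration: `ToyToolServer`, `discover_and_call`, and the `lookup_customer` tool are hypothetical names, the server lives in memory, and the transport and initialization handshake are left out. It is not the official SDK.

```python
from typing import Any, Callable


class ToyToolServer:
    """In-memory stand-in for an MCP server (hypothetical; no transport or handshake)."""

    def __init__(self) -> None:
        self._tools: dict[str, dict[str, Any]] = {}

    def register(self, name: str, description: str,
                 input_schema: dict[str, Any],
                 handler: Callable[..., str]) -> None:
        self._tools[name] = {
            "description": description,
            "inputSchema": input_schema,
            "handler": handler,
        }

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        """Answer one JSON-RPC 2.0 request shaped like an MCP message."""
        base = {"jsonrpc": "2.0", "id": request.get("id")}
        method = request.get("method")
        params = request.get("params", {})
        if method == "tools/list":
            tools = [
                {"name": n, "description": t["description"],
                 "inputSchema": t["inputSchema"]}
                for n, t in self._tools.items()
            ]
            return {**base, "result": {"tools": tools}}
        if method == "tools/call":
            tool = self._tools.get(params.get("name"))
            if tool is None:
                return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
            text = tool["handler"](**params.get("arguments", {}))
            return {**base, "result": {
                "content": [{"type": "text", "text": text}],
                "isError": False,
            }}
        return {**base, "error": {"code": -32601, "message": "Method not found"}}


def discover_and_call(server: ToyToolServer, tool_name: str,
                      arguments: dict[str, Any]) -> str:
    """What any client does: list the tools first, then call one by name."""
    listing = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    available = {t["name"] for t in listing["result"]["tools"]}
    if tool_name not in available:
        raise LookupError(f"{tool_name!r} is not offered by this server")
    reply = server.handle({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })
    return reply["result"]["content"][0]["text"]


server = ToyToolServer()
server.register(
    name="lookup_customer",  # hypothetical tool
    description="Return a one-line summary for a customer.",
    input_schema={"type": "object",
                  "properties": {"name": {"type": "string"}},
                  "required": ["name"]},
    handler=lambda name: f"{name}: 2 open tickets",
)
summary = discover_and_call(server, "lookup_customer", {"name": "Acme"})
```

The client code never needs to know what `lookup_customer` does ahead of time. Because the tool describes itself through the listing, a different AI client could be swapped in without touching the tool.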

More choices for consumers

For users, MCP adoption makes it possible to use more tools with their favorite AI. You can stay in your favorite AI tool (Claude, Cursor, Windsurf, ChatGPT, Gemini) and have the AI pull in data and take actions in the other systems you use.

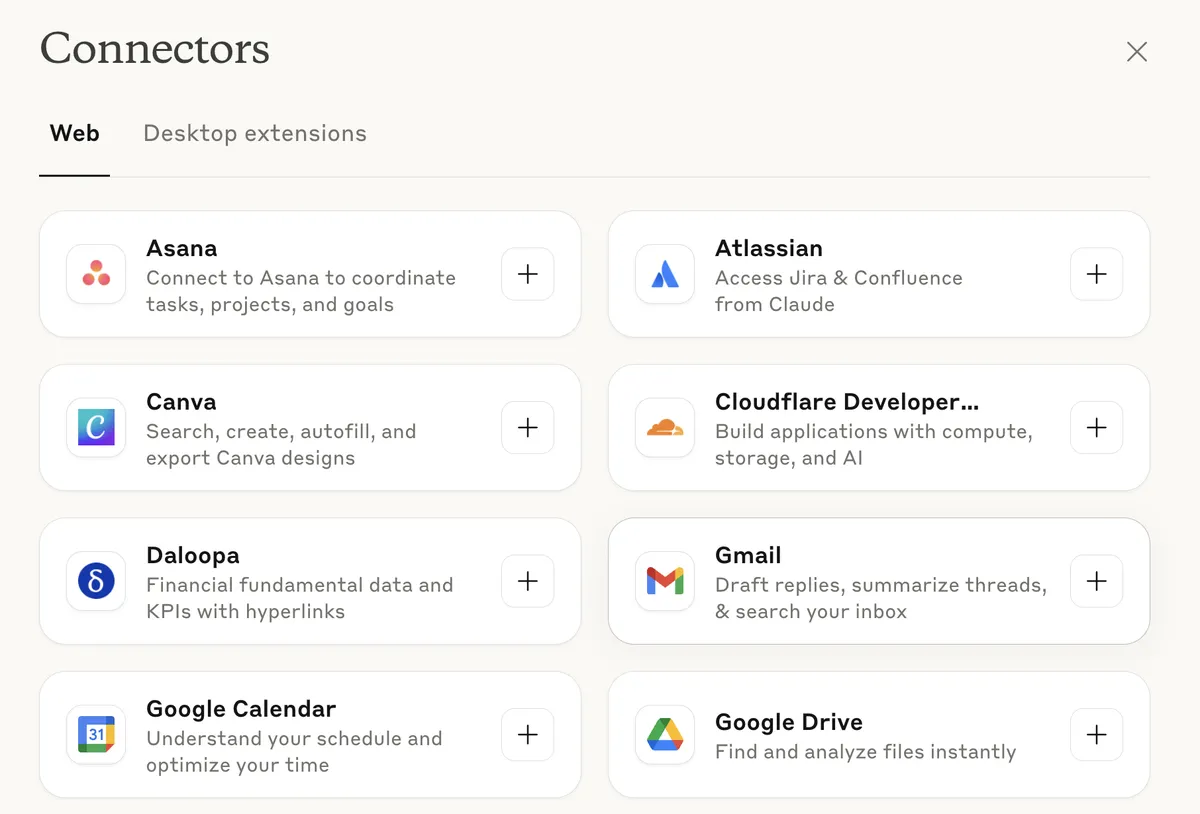

Claude connectors include engineering, PM, design, and even finance tools.

Prediction: Consumers will start consolidating their AI clients. Once their favorite AI client works well with their existing apps, consumers will want fewer surfaces to log in to, not more. The frontier AI systems are converging on capabilities, and instead of buying subscriptions to multiple AIs, consumers will start to buy the highest "Max" plan of their favorite AI client. Agentic AI that reasons across many tools only works well with the largest reasoning models, and you'd want the highest plan for that.

A composable AI stack for Enterprises

For enterprises, you can now build and buy intelligence separately from tools. This composability makes it easier to evolve your AI infrastructure, since you can swap out compatible components. It also reduces the risk of being overly dependent on a single system. This makes it a good time to invest in a custom MCP tool layer for your company, since MCP provides a clear story on ecosystem compatibility.

Prediction: Each team will have a favorite AI system that's optimized for its workflows, but these systems can now interoperate across more data and apps in the enterprise to get more done. Enterprises will start investing in managing access to data and apps, adopting MCP infrastructure that builds in security, governance, and compliance. Enterprise MCP platforms will give you a single control plane to determine what every deployed AI can access.
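As a rough illustration of what a "single control plane" could mean in practice, the sketch below keeps one allowlist that decides which tools each deployed AI can see and call. This is an assumption about how such a layer might look, not a description of any existing product: `ControlPlane`, the agent names, and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ControlPlane:
    """Hypothetical allowlist: which agent may see and call which tools."""
    policy: dict[str, set[str]] = field(default_factory=dict)

    def allow(self, agent: str, *tools: str) -> None:
        """Grant an agent access to one or more tools."""
        self.policy.setdefault(agent, set()).update(tools)

    def visible_tools(self, agent: str, offered: list[str]) -> list[str]:
        """Filter a tool listing down to what this agent is allowed to see."""
        allowed = self.policy.get(agent, set())
        return [tool for tool in offered if tool in allowed]

    def authorize(self, agent: str, tool: str) -> None:
        """Raise before a call is forwarded if the agent lacks access."""
        if tool not in self.policy.get(agent, set()):
            raise PermissionError(f"{agent} may not call {tool}")


plane = ControlPlane()
plane.allow("support-assistant", "crm.read_ticket", "crm.add_note")
plane.allow("finance-assistant", "ledger.read_invoice")

# Tools offered by the company's MCP layer (hypothetical names).
offered = ["crm.read_ticket", "crm.add_note", "ledger.read_invoice"]
support_view = plane.visible_tools("support-assistant", offered)
```

Filtering the listing and checking each call are two separate steps on purpose: hiding a tool from discovery is not enough if a client can still call it by name.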

Reusability for developers

For developers, we previously had to build a separate integration for each tool and manage authentication, function calls, and more. While this may have made sense for first-party data in apps, users often want to retrieve data from adjacent services. MCP has made it easier to reuse connectors from the community. There is a large number of MCP connectors we can use out of the box, and even hosted options (Composio, Pipedream, Glama, Smithery) to draw on.

Prediction: We'll see the beginnings of MCP-first startups. MCP opens up a new distribution channel for everyone: your users can benefit from your product via any AI platform.

State of MCP support

Support for MCP is early but developing fast. Right now, ChatGPT has only partial support for MCP: you can connect MCP servers as ChatGPT connectors, but those servers must expose only two tools, search and fetch, and they only work in deep research. As a developer, you can use MCP with the API, which supports the full set of MCP tools; all of a server's tools become available, as the dev playground shows.

Custom GPTs support any action, but not MCP. So if you had a layer that translates MCP to their API spec, it would work. However, Custom GPTs with actions only support the 4o and 4.1 models, so you don't get the benefit of the o-series models.
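One way to picture such a translation layer is a function that turns MCP tool definitions into an OpenAPI document, which is the format custom actions are described in. The sketch below covers only that schema-mapping half, under stated assumptions: `mcp_tools_to_openapi`, the `/tools/<name>` path layout, the `search_docs` tool, and the bridge URL are all hypothetical. A working bridge would also need an HTTP service that receives each POST and forwards it to the MCP server as a `tools/call`.

```python
from typing import Any


def mcp_tools_to_openapi(tools: list[dict[str, Any]], base_url: str) -> dict[str, Any]:
    """Map MCP tool definitions onto one POST operation per tool."""
    paths: dict[str, Any] = {}
    for tool in tools:
        # Assumed layout: each tool becomes POST /tools/<name>.
        paths[f"/tools/{tool['name']}"] = {
            "post": {
                "operationId": tool["name"],
                "summary": tool.get("description", ""),
                "requestBody": {
                    "required": True,
                    # The tool's JSON Schema is reused as the request body schema.
                    "content": {"application/json": {"schema": tool["inputSchema"]}},
                },
                "responses": {"200": {"description": "Tool result"}},
            }
        }
    return {
        "openapi": "3.1.0",
        "info": {"title": "MCP tool bridge", "version": "0.1.0"},
        "servers": [{"url": base_url}],
        "paths": paths,
    }


# A tool definition in the shape returned by an MCP tools/list call.
tools = [{
    "name": "search_docs",  # hypothetical tool
    "description": "Search internal documentation.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]
spec = mcp_tools_to_openapi(tools, "https://bridge.example.com")
```

The mapping is nearly mechanical because an MCP tool already carries a JSON Schema for its input, which is what an OpenAPI request body expects.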

Gemini does not support MCP yet, and Claude has the best support.

Interoperability opens up new possibilities

One trend we’re seeing is that the leading agents are starting to look much more general-purpose, even though they started off being vertically oriented.

Claude Code has taken off with engineers, but when you add custom tools, it can do much more. I've been experimenting with Claude Code as a lightweight CRM tool (by asking it to maintain md files per customer). Anthropic has many teams using Claude Code, including growth marketing, design, and even legal.

The team realized that the ability to make AI tools – the modular capabilities used by agents to gather information or take actions – “plug-and-play” was the missing piece for enterprise-scale agent development.
- Tech At Bloomberg

As AI models get more powerful, the main unlock is now which tools they can access, and MCP is helping to set the stage for that.

If you’re exploring MCP in production, or building your enterprise control plane, I’d love to compare notes - reach out here.